redis and cache

Redis 系列(二): 连集合底层实现原理都不知道,你敢说 Redis 用的很溜?

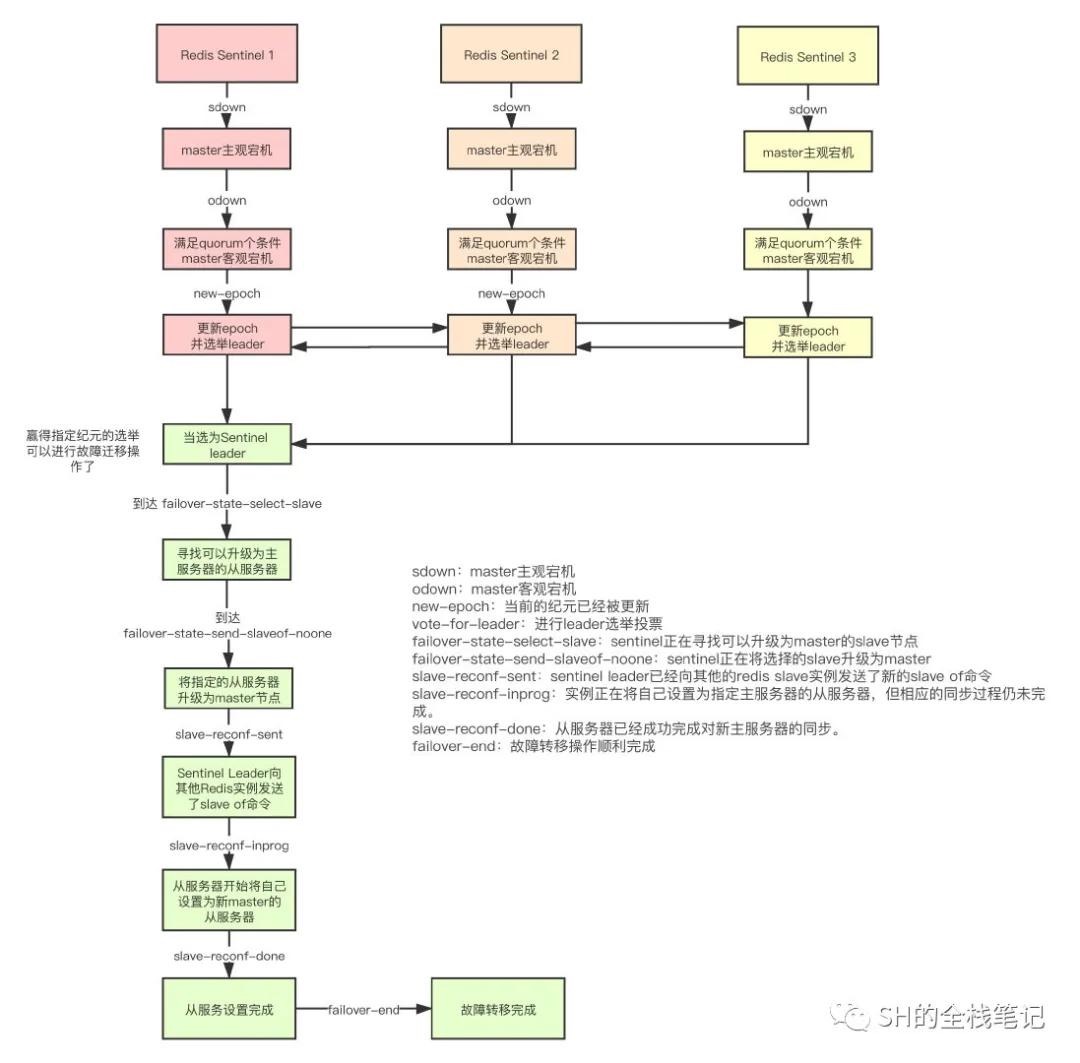

Redis进阶 - 高可用:哨兵机制(Redis Sentinel)详解

high performace

- 多线程?

- 提高数据处理速度: read,decode,write,

- 折中的master-worker,总体看还是单线程

mechanism

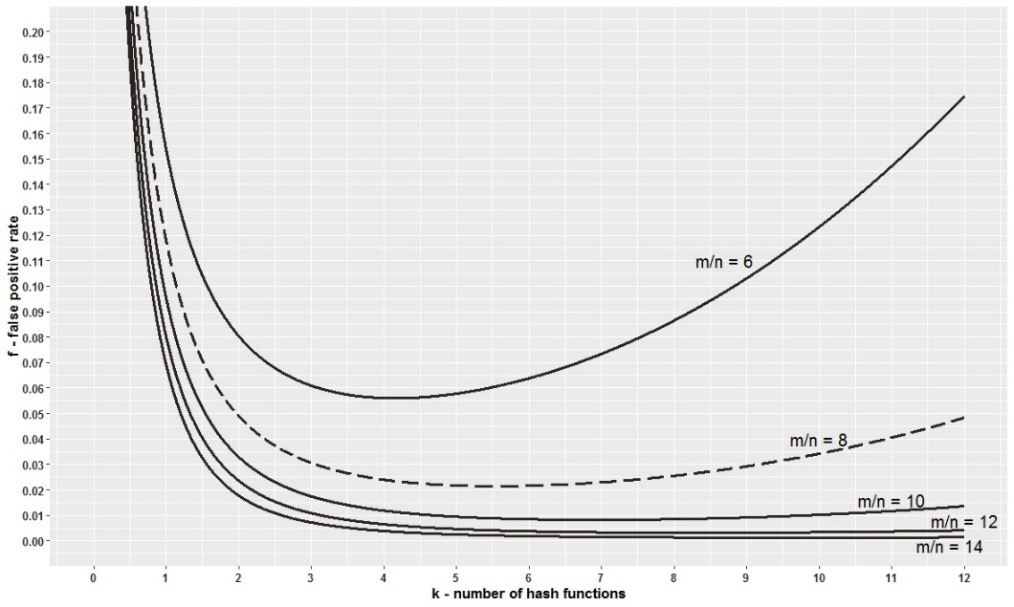

basic structure

dbList: bList:[redisDB1,redisDB2,…]

dbList: bList:[redisDB1,redisDB2,…]

store form:

- value dict: dict[stringRedisobj] = some redisObj

- expire dict: dict[strinngRedisObj] = expire time

reids object: econding: underlying implement

|

|

high performace

why have high performace:

- 多路复用(select or epoll: 高效处理tcp连接;

- High performace DataStruct;

- in-memory store ;

- single thread:no locker cost

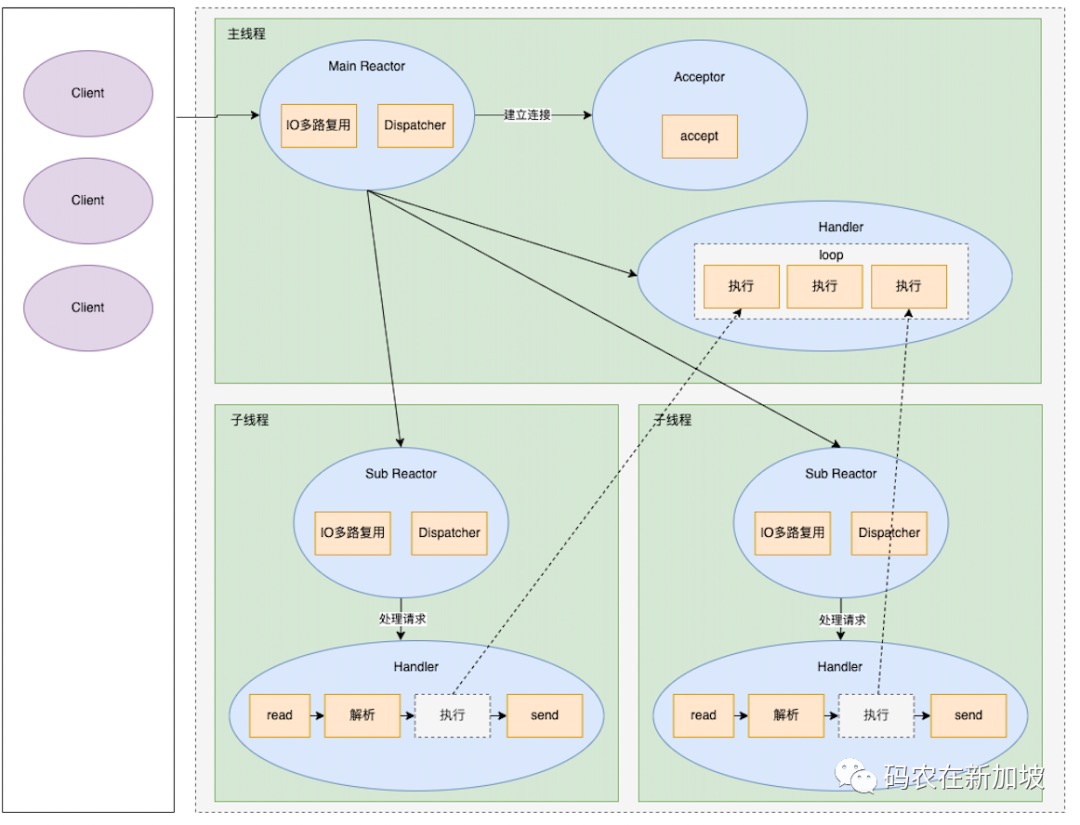

mutiple thread

why single thread: not cpu-bound, i/o bound and memory bound

but why use mutiple thread now: improve i/o, simple version of multi reactor; child thread work: read/write socket ;

zipList

- what? 动态数组

- feauture:

- 元素大小可变,节省空间;

|

|

| 域 | 长度/类型 | 域的值 |

|---|---|---|

| zlbytes | uint32_t | 整个 ziplist 占用的内存字节数,对 ziplist 进行内存重分配,或者计算末端时使用。 |

| zltail | uint32_t | 到达 ziplist 表尾节点的偏移量。 通过这个偏移量,可以在不遍历整个 ziplist 的前提下,弹出表尾节点。 |

| zllen | uint16_t | ziplist 中节点的数量。 当这个值小于 UINT16_MAX (65535)时,这个值就是 ziplist 中节点的数量; 当这个值等于 UINT16_MAX 时,节点的数量需要遍历整个 ziplist 才能计算得出。 |

| entryX | ? | ziplist 所保存的节点,各个节点的长度根据内容而定。 |

| zlend | uint8_t | 255 的二进制值 1111 1111 (UINT8_MAX) ,用于标记 ziplist 的末端。 |

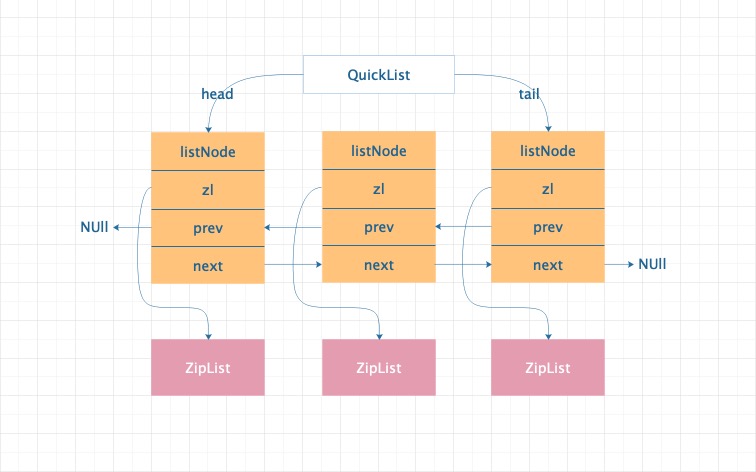

quickList

vs linker: reduce alloc time vs array: reduce moving cost

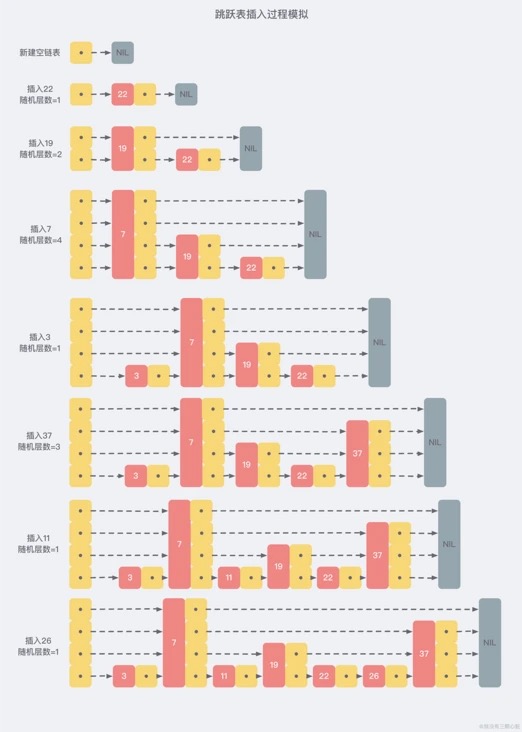

skipList

what: order linker list with mutiple next pointer

simple code:

|

|

|

|

- vs avl tree;

- 范围查找更方便

- 更简单: 不需要为了平衡而进行旋转操作

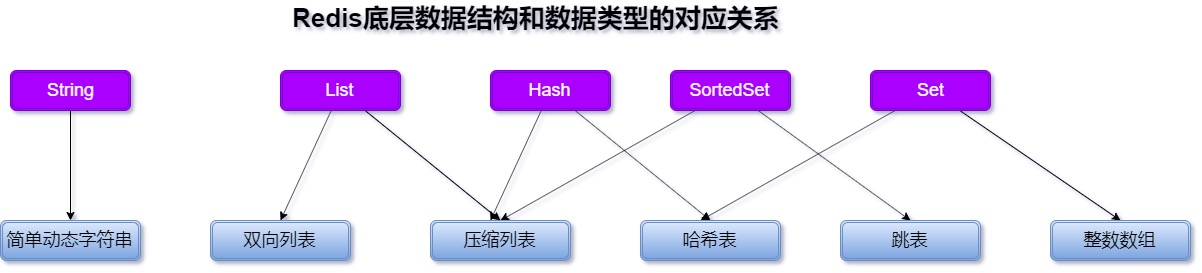

Data Types

string

sds,simple dymanic sting:

|

|

sds feature:

- 二进制安全: c会被\0截断; 而 sds通过len能获取完整长度;

- 高效append: 通过预先分配free空间;减少ReAlloc次数;

sds code:

|

|

list

how: quickList

use case: store data sorted by added time

|

|

hash

structure :

reidsKey field1 value1, field2 value2 ....

use case:

- record some item info

|

|

- increase field count

|

|

implement:

- zipList

- hash

set

use case:

- remove duplicated things

|

|

how it work:

- hash

- 整数集合

zset

what: sort set also act as a simple table:

|

|

use case:

- rank

select from rank order by score asc limit 3 offset 3update set score=score+n where id=xxx

|

|

- count number in score range

if score=timestamp, slide window

select count(*) from table where score>=a and score<=b

|

|

how it:

- zipList

- skipList

cache problem

what:

- cache not work

- big pressure on db

types:

- all: cache avalanche

- partly: 1. 击穿(hotspot invalid): hot keys(one or some) invalid; 2. 渗透(cache penetration): same keys always query from db,

how:

- cache avalanche:

- before: high availablity: master-slave or clusrter architectue

- in progress: 降级,限流

- hotspot invalid:

- expired time:

- don’t have expired time: configure data;

- set different expired time;

- add Locker when access hot key;

- expired time:

- cache penetration:

- valid data;

- bitMap or blomfilter to cache existing data;

cache consistency

what: cache data and mysql data not consitent

optianl solution:

- update cache, then update db: 🚫 if update db fail, must retry

- update db, then update cache: 🚫

|

|

best solutiion: update mysql, then delete cache

why: update db then update cache: will be overwrte by older process

|

|

write behind;

cache strategy;

-

cache aside(lazy loading): read: client-> cache->db; update: client->db, client->cache[delete];

-

read/write through: 由代理管理数据库和cache; client -> proxy->{cache,db}

-

write behind caching;

client -> cache; then after a time: cache -> write db;strategy:

- interval: after x sconds;

- lazy: after updating;

problem: 一致性降低; 策略:

- 提高cache 稳定性;

- 只从cache读取;

bigData

-

基于位的存储

- bitmap

- blomfilter:

-

基于概率的存储

- hyperloglog

bitMap((bitArray)

-

what? array of bit

- int value = array index;

- bitarray[arrayIndex]=1

-

feature?

- 适合存储分布均匀的整数;

- 能还原实际值;

-

use case?

- userID is autoIncrement

roaring bitmap

- what? bitmap 改进版,在存储少量数据的时候节省空间

- how? 空间进行分割: [1]:int16 arrary or bitArray [.]: [.] [2^16]

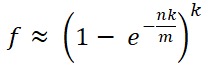

bloomfilter

-

what? bitmap(array)的应用

- index = hashCode%bitLength

- store multiple index;

-

how? value1-> hashValue1%bitarrayLength=bitArrayIndex1; value1->hashValue2%bitarrayLength=bitArrayIndex2; if bitArray[bitArrayIndex1]=true && bitArray[bitArrayIndex2]=true; data exist

-

占用空间?2^32数字占用空间

when k=4; m/n(totalBit/totalEle)= 10~20 false positive: 0.01 ~0.001

-

use case

hyperloglog

persistance data

types

aof:append only file; command list

rdb: redis database; snapshot of memory

recover: if aof enable, load aof file frstly

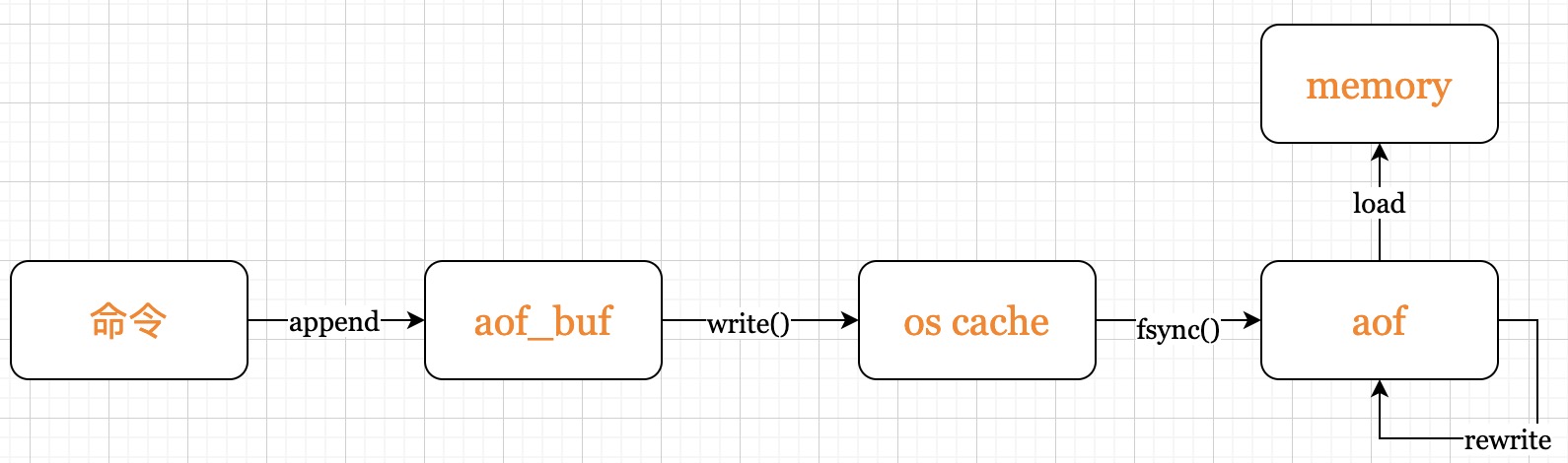

aof

config:

|

|

appendfsync: when flush to disk

- everysec

- always: every write command

- no: controls by os;

how:

|

|

pros: less data loss; cons: big file and more recover tim

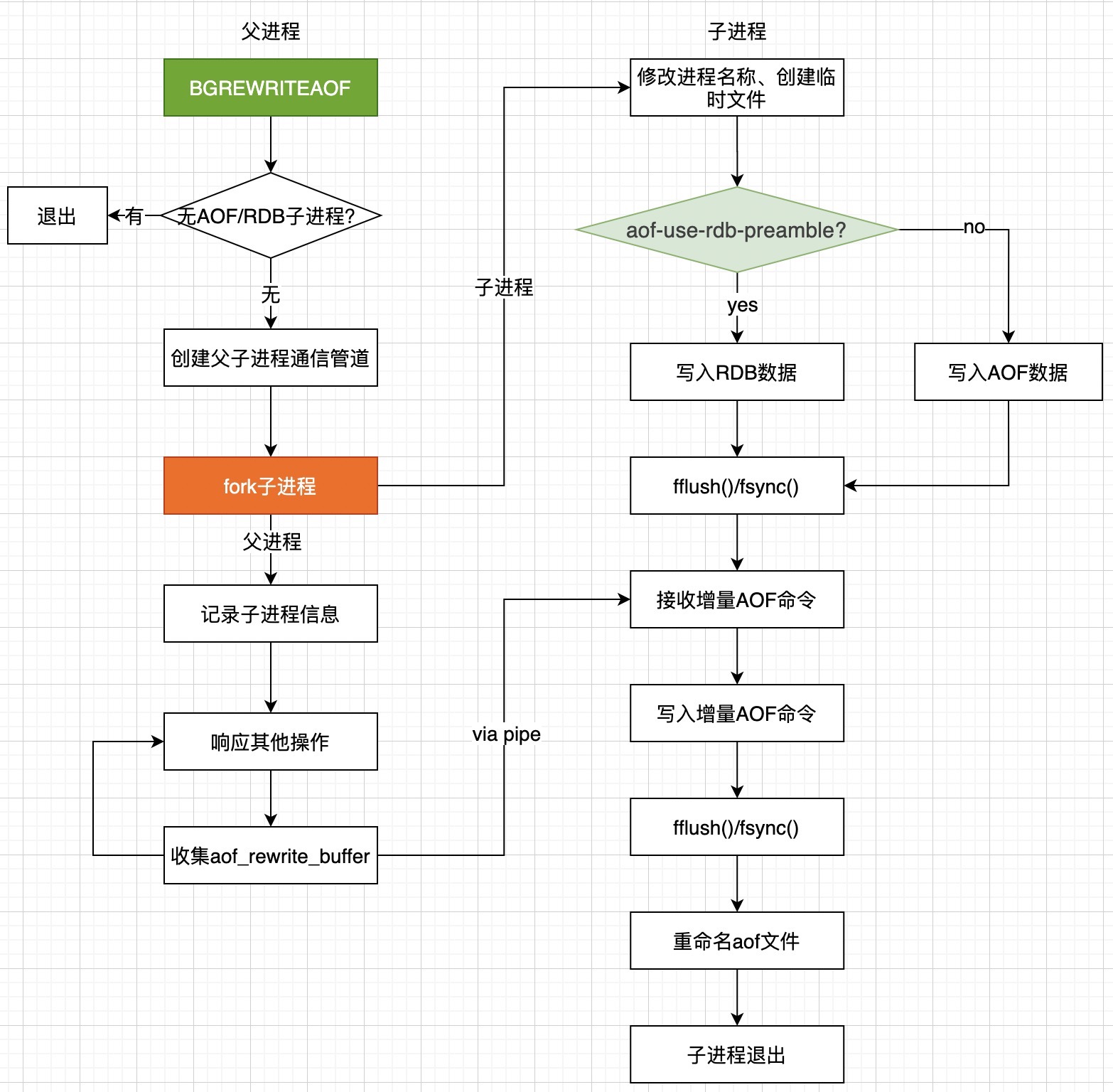

rewrite aof

what: merge the commands;

config:

|

|

how:

|

|

![[Pasted image 20221115052856.png]]

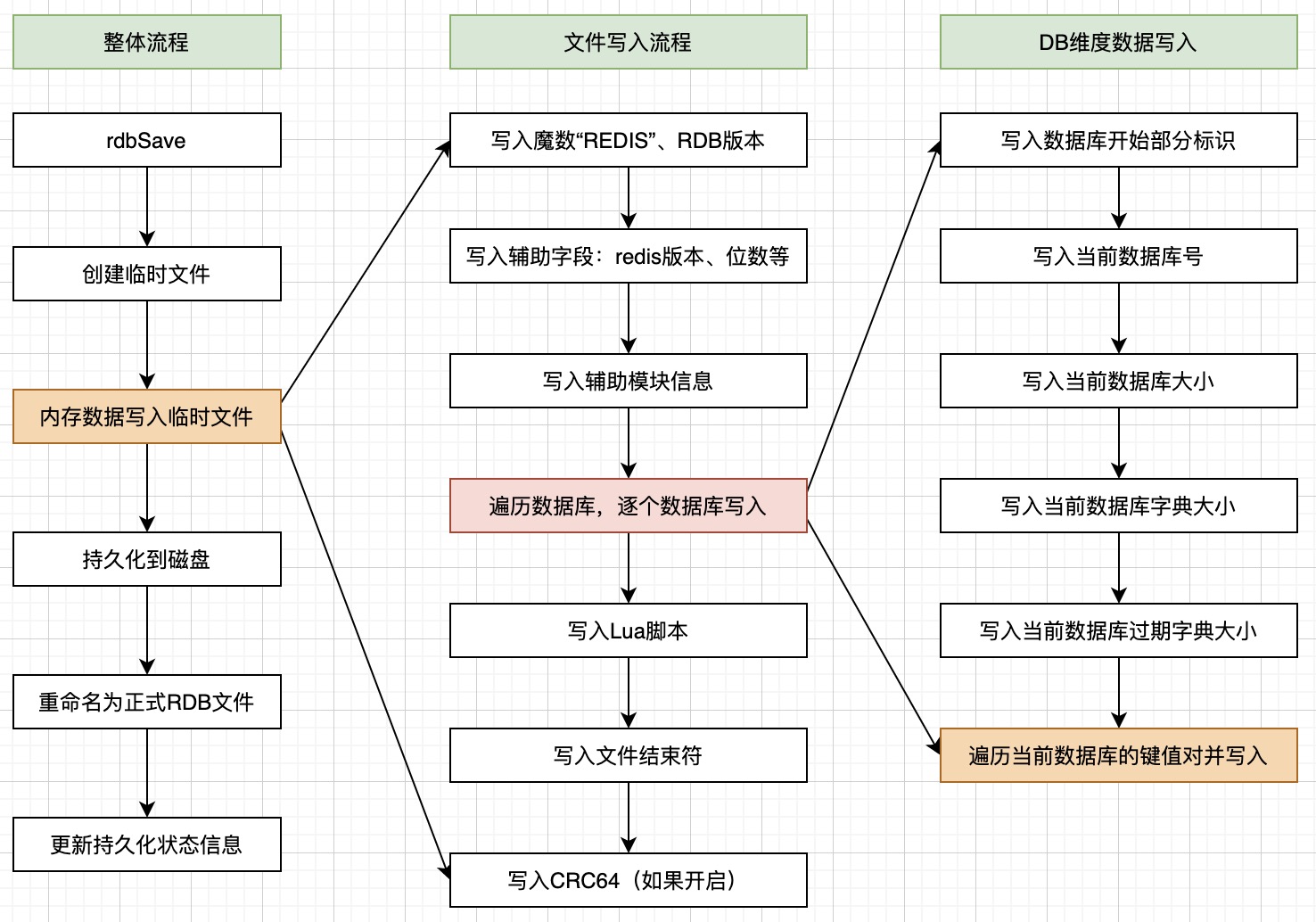

RDB

config:

|

|

in 60s, 900 keys have changed

how:

|

|

pros:

- compact and less recover time cons:

- more data loss

eviction and expire

eviction: evict data when memory reach the max memory

|

|

eviction types:

- noeviction: default

- volatile data

- volatile-random:

- volatile-ttl

- volatile-lru

- volatile-lfu

- all keys

- allkeys-random

- allkey-lru

- allkey-lfu

expires: remove expired keys

how: lazy-expire and auto

|

|

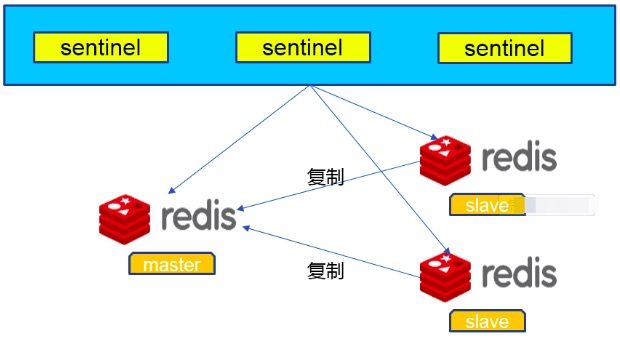

replica

replication: 手动故障转移

|

|

sentinel: automic failover

|

|

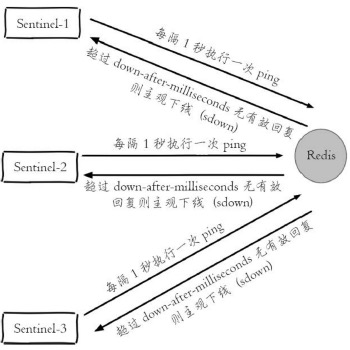

how:

how:

- send heartbeat to check health

- heartbeat fail:

- client send sentinel is-master-down-by-addr: get vote > quorum, 客观下线

- client send sentinel is-master-down-by-addr: get vote > quorum, 成为leader

- leader do failover