- Know the basics of Prometheus

- Create a basic server to monitor Redis

1. What is Prometheus?

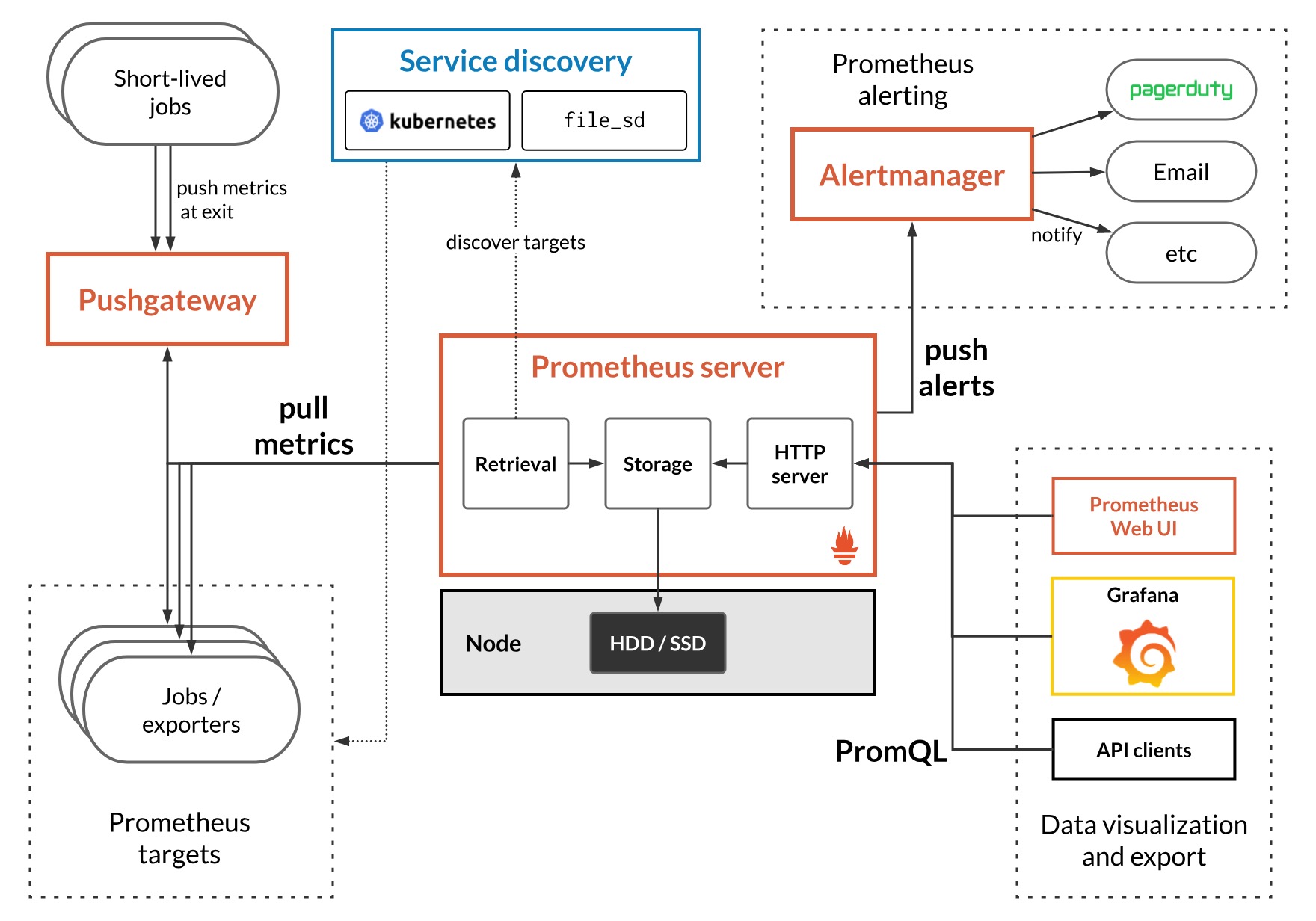

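Prometheus:

- prom (the Prometheus server): mainly stores the scraped data

- exporter: collects the data

- grafana: the front end, displays the data

exporter:

- Collects and converts data

- Does not store data; it collects and converts only when its metrics endpoint is called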
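The "collect only on scrape" behaviour can be sketched with the Python standard library. This is a minimal illustration, not how any real exporter is implemented: `render_metrics`, `COLLECTORS`, `fake_connected_clients`, and the metric name `redis_connected_clients` are all hypothetical names chosen for the example, and a real Redis exporter would query Redis inside the collector.

```python
from http.server import BaseHTTPRequestHandler
from typing import Callable, Dict

# Counts how often the collector runs, to show that nothing is collected
# until someone asks for the metrics.
calls = {"n": 0}


def fake_connected_clients() -> float:
    """Hypothetical collector; a real exporter would query Redis here."""
    calls["n"] += 1
    return 3.0


# Hypothetical registry: metric name -> function that reads the current value.
COLLECTORS: Dict[str, Callable[[], float]] = {
    "redis_connected_clients": fake_connected_clients,
}


def render_metrics(collectors: Dict[str, Callable[[], float]]) -> str:
    """Run every collector now and convert the results to 'name value' lines."""
    lines = [f"{name} {collect()}" for name, collect in sorted(collectors.items())]
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves /metrics; the data is collected per request and never stored."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(COLLECTORS).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.end_headers()
        self.wfile.write(body)


# One simulated scrape, without starting a server.
body = render_metrics(COLLECTORS)
print(body, end="")
```

To serve it, something like `HTTPServer(("", 8000), MetricsHandler).serve_forever()` from `http.server` would work; the port is an arbitrary choice for the example.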

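Time Series Data

what:

- Compared with MySQL, it has only 2-3 columns: key, value, timestamp

- Every data point is produced at a timestamp

- Well suited to monitoring

Prometheus time series data:

- metric name and labels: together they act as the key

- value

- timestamp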

- `http_requests_total{method="GET", status="200"} 1023 @ 1670000000`

- `http_requests_total{method="POST", status="200"} 978 @ 1670000000`
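The idea that the metric name plus labels act as the key can be sketched as a small in-memory store. This is only an illustration of the data model, not how the Prometheus TSDB is implemented; `series_key`, `append`, and `store` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

SeriesKey = Tuple[str, Tuple[Tuple[str, str], ...]]

# Each series key maps to its list of (timestamp, value) samples.
store: Dict[SeriesKey, List[Tuple[int, float]]] = defaultdict(list)


def series_key(name: str, labels: Dict[str, str]) -> SeriesKey:
    """Metric name plus sorted labels identify one time series."""
    return (name, tuple(sorted(labels.items())))


def append(name: str, labels: Dict[str, str], value: float, timestamp: int) -> None:
    """Add one sample to the series identified by name and labels."""
    store[series_key(name, labels)].append((timestamp, value))


# The two samples from the notes: same metric name, different labels,
# so they land in two separate series.
append("http_requests_total", {"method": "GET", "status": "200"}, 1023, 1670000000)
append("http_requests_total", {"method": "POST", "status": "200"}, 978, 1670000000)

print(len(store))
```

Label order does not matter for the key, so `{"status": "200", "method": "GET"}` addresses the same series as the first sample.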

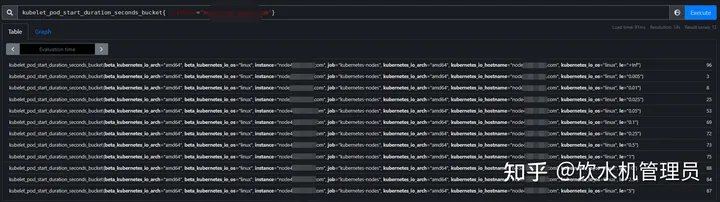

PromQL

Syntax:

```
function(metric{label}[time range])
```

basic:

- request_total: instant value; the most recent value

- request_total[5m]: range value; all samples reported in the last 5m
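The difference between the two selectors can be sketched over a plain list of samples. This is a simplification that assumes samples are sorted by time and ignores Prometheus details such as staleness handling; `instant`, `range_select`, and the sample values are hypothetical.

```python
from typing import List, Optional, Tuple

Sample = Tuple[int, float]  # (timestamp in seconds, value)

# Hypothetical samples of request_total, sorted by timestamp.
samples: List[Sample] = [(0, 1.0), (60, 2.0), (120, 4.0), (400, 7.0)]


def instant(samples: List[Sample], now: int) -> Optional[Sample]:
    """Like `request_total`: the latest sample at or before `now`."""
    past = [s for s in samples if s[0] <= now]
    return past[-1] if past else None


def range_select(samples: List[Sample], now: int, window: int) -> List[Sample]:
    """Like `request_total[5m]`: every sample inside the window ending at `now`."""
    return [s for s in samples if now - window < s[0] <= now]


print(instant(samples, now=400))
print(range_select(samples, now=400, window=300))
```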

Aggregation operations

- sum

- min

- count
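What these operators do can be sketched as grouping series by one label and reducing each group, roughly what `sum by (method) (http_requests_total)` expresses in PromQL. The `aggregate` helper is hypothetical, and the third series (status 500) is made-up data added only so that one group has more than one member.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# (labels, current value) for each series of http_requests_total.
series: List[Tuple[Dict[str, str], float]] = [
    ({"method": "GET", "status": "200"}, 1023.0),
    ({"method": "POST", "status": "200"}, 978.0),
    ({"method": "GET", "status": "500"}, 5.0),  # hypothetical extra series
]


def aggregate(series, by: str, op: Callable[[List[float]], float]) -> Dict[str, float]:
    """Group series by the label `by`, then reduce each group with `op`."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for labels, value in series:
        groups[labels[by]].append(value)
    return {group: op(values) for group, values in groups.items()}


print(aggregate(series, "method", sum))  # sum
print(aggregate(series, "method", min))  # min
print(aggregate(series, "method", len))  # count
```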

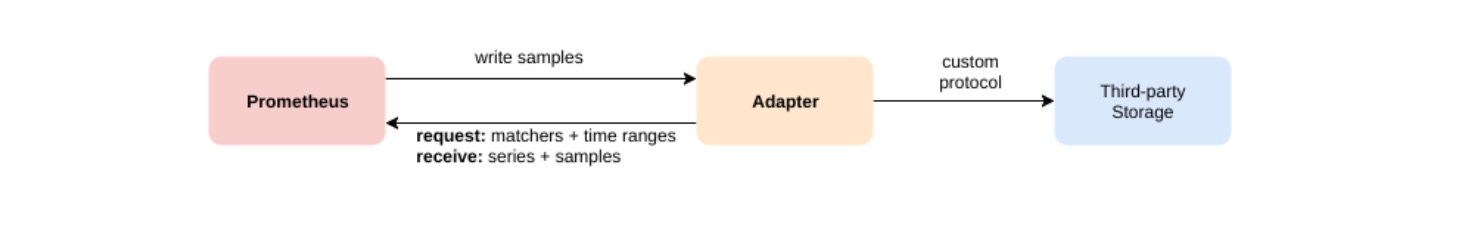

Storage

Data can also be stored in remote storage.

Config

```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    metrics_path: /metrics
    scheme: http

    static_configs:
      - targets: ["localhost:9090"]
```

Metadata:

```json
{
  "discoveredLabels": {
    "__address__": "localhost:9090",
    "__metrics_path__": "/metrics",
    "__scheme__": "http",
    "job": "prometheus"
  },
  "labels": {
    "instance": "localhost:9090",
    "job": "prometheus"
  },
  "scrapePool": "prometheus",
  "scrapeUrl": "http://localhost:9090/metrics",
  "globalUrl": "http://f9cdf3cbf0ac:9090/metrics",
  "lastError": "out of bounds",
  "lastScrape": "2021-07-26T15:03:04.589192767Z",
  "lastScrapeDuration": 0.0173774,
  "health": "down"
}
```

With this config, Prometheus sends `GET http://localhost:9090/metrics`.

```yaml
- job_name: baidu_http2xx_probe
  params:
    module:
      - http_2xx
    target:
      - baidu.com
  metrics_path: /probe
  static_configs:
    - targets:
        - 127.0.0.1:9115
```

With this config, Prometheus sends `GET 127.0.0.1:9115/probe?params=xxxx`, with the `params` entries passed as query parameters.
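How the job config turns into a scrape URL can be sketched with `urllib.parse`. Prometheus sends the `params` entries as URL query parameters; the `probe_url` helper below is a hypothetical illustration of that mapping, and it assumes the default `http` scheme.

```python
from typing import Dict, List
from urllib.parse import urlencode


def probe_url(target: str, metrics_path: str, params: Dict[str, List[str]]) -> str:
    """Combine target address, metrics_path and params into the scrape URL."""
    query = urlencode(params, doseq=True)  # doseq expands the list values
    return f"http://{target}{metrics_path}?{query}"


# Values taken from the baidu_http2xx_probe job above.
url = probe_url(
    "127.0.0.1:9115",
    "/probe",
    {"module": ["http_2xx"], "target": ["baidu.com"]},
)
print(url)
```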

- Target types:

  - static

  - dynamic

    - JSON file

    - service discovery

- targets: the

Prometheus

Metric types

The Prometheus database stores the metric, its value, and a timestamp:

api_request_count: 100; 2023-12-05 11:00:00

On the client side, metrics are divided into several metric types:

- counter:

  - Only ever increases

  - Use case: number of requests

  - Resets to zero when the service restarts

```
request_count 1 2023-12-05 11:00:00
request_count 1 2023-12-05 12:00:00
```
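Because a counter drops back to zero on restart, a consumer has to treat any decrease as a reset rather than a negative change. The sketch below shows that idea only; the real PromQL `increase()` also extrapolates to the window boundaries, which is left out here, and the sample values are hypothetical.

```python
from typing import List


def increase(values: List[float]) -> float:
    """Total growth of a counter over consecutive samples, tolerating resets."""
    total = 0.0
    for prev, cur in zip(values, values[1:]):
        if cur < prev:
            # The counter restarted from zero, so everything counted since
            # the restart is new growth.
            total += cur
        else:
            total += cur - prev
    return total


print(increase([10, 15, 3, 8]))  # restart between 15 and 3
print(increase([1, 1]))          # the two request_count samples above
```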

- gauge:

  - Can increase and decrease

  - Changes between two scrapes can be missed, so set a reasonable scrape interval

  - Use case: everyday metrics

```
temperature 20 2023-12-05 11:00:00
temperature 15 2023-12-05 12:00:00
```

Histogram, Summary:

A histogram or summary is exposed as a group of three metrics.

```
prometheus_tsdb_compaction_chunk_range_bucket{le="25600"} 100
prometheus_tsdb_compaction_chunk_range_bucket{le="102400"} 200
prometheus_tsdb_compaction_chunk_range_bucket{le="409600"} 300
```

```
request_duration_seconds_bucket{le="0.5"} 2 2023-12-05 11:00:00
request_duration_seconds_bucket{le="1.0"} 5 2023-12-05 11:00:00
```
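The `le` buckets are cumulative, which is what makes quantile estimation possible. The sketch below estimates a quantile by linear interpolation inside the bucket that contains the target rank, the same idea PromQL's `histogram_quantile` is built on. It is simplified: it assumes the last bucket already contains every observation (a real histogram ends with an `le="+Inf"` bucket) and that the lowest bucket starts at 0.

```python
from typing import List, Tuple


def histogram_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate quantile q from sorted (upper_bound, cumulative_count) buckets."""
    total = buckets[-1][1]
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if count == lower_count:
                return upper_bound  # empty bucket, nothing to interpolate
            # Assume observations are spread evenly inside the bucket.
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return buckets[-1][0]


# Bucket values from the prometheus_tsdb_compaction_chunk_range example above.
buckets = [(25600.0, 100.0), (102400.0, 200.0), (409600.0, 300.0)]
print(histogram_quantile(0.5, buckets))
```

With these buckets the median lands halfway through the second bucket, because 150 of 300 observations is midway between the cumulative counts 100 and 200.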

Usage

- Increase the counter

- Alert on errors

Prometheus

The overall flow:

alert -> route -> receiver.

Alert rules

groups:

- Help organize rules and make them more readable

- Do not affect routing

```yaml
groups:
  - name:
    rules:
      - alert: ApiErrorCountIncreased
        expr: increase(api_error_count[1m]) >= 10
        for:
        labels:
          severity:
        annotations:
  - name:
    rules:
```

labels: used for grouping and routing

annotations:

Alertmanager

```yaml
route:
  group_by:
  group_wait:
  group_interval:
  routes:
    - match:
        severity: "error"
      receiver: xx
    - match:
receivers:
  - name:
```

rules

Alertmanager

route:

what: decides which receiver an alert is sent to

- Alerts are first grouped according to `group_by`

- The group timing settings then decide when the notification is sent

receiver:

define the.

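`group_wait: 30s`, `group_interval: 5m`, `repeat_interval: 1h`

- `group_wait: 1m`: wait 1m for other notifications in the same group; if new ones arrive, send them together

- `group_interval: 5m`: two notifications for the same group are at least 5m apart

- `repeat_interval: 1h`: if the alert keeps firing, the notification is re-sent only after at least one hour

Example with `increase(request_count[1m]) > 10`:

10:00: the alert triggers. After waiting until 10:01 with no new notification in the same group, the notification is sent.

The alert lasts until 10:02 and then ends.

10:04:00: a new trigger. Because this is < 5m after the previous one, and if the alert stays active for >= 5m, the notification is sent at 10:07.

If it keeps firing until 11:04, the notification is sent again.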

Alert rule config

```yaml
groups:
  - name: example_alerts
    rules:
      - alert: HighRequestLatency
        expr: job:request_latency_seconds:mean5m{job="myjob"} > 0.5
        for: 10m
        labels:
          severity: page
        annotations:
          summary: High request latency of job myjob
          description: This alert fires when the mean request latency of myjob is above 0.5s for 10 minutes.
          dashboard: http://example.com/dashboard/myjob
```

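labels: the metadata of an alert, used for

- routing

- grouping

annotations: extra data attached to the alert, used in the notification

Alert states:

- pending: the condition is true, but the `for` duration has not elapsed yet

- firing: comes after pending; the notification may not have gone out yet, because Alertmanager may still be waiting on the group

- resolved: the condition that caused the alert no longer holds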
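The state transitions can be sketched as a small function over successive rule evaluations. This is an illustration under simplifying assumptions, not Prometheus code: `evaluate` is a hypothetical helper, timestamps are plain seconds, and the extra `inactive` state (no alert at all) is added so that the output is complete.

```python
from typing import List, Optional, Tuple


def evaluate(history: List[Tuple[int, bool]], for_seconds: int) -> List[str]:
    """Return the alert state after each (timestamp, condition_is_true) evaluation."""
    states: List[str] = []
    active_since: Optional[int] = None  # when the condition first became true
    firing = False
    for timestamp, condition in history:
        if condition:
            if active_since is None:
                active_since = timestamp
            # The alert fires only once the condition has held for `for_seconds`.
            firing = timestamp - active_since >= for_seconds
            states.append("firing" if firing else "pending")
        else:
            # A firing alert that stops matching is resolved; a pending one
            # simply disappears without ever notifying anyone.
            states.append("resolved" if firing else "inactive")
            active_since = None
            firing = False
    return states


# Evaluations every 5 minutes with `for: 10m` (600 seconds), as in the rule above.
history = [(0, True), (300, True), (600, True), (900, False), (1200, False)]
print(evaluate(history, for_seconds=600))
```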

group by: