你是一个 go 语言专家,我正在学习go,进对我的总结进行评价并指导,给出一些建议, 并给出你修订后的版本

title: “map”

date: 2020-05-14T11:39:03+08:00

draft: false

tags: [“go”,“hash”]

lastmod: 2023-04-18T16:47:26+0800

Map实现原理分析

深度解密Go语言之 map

由浅到深,入门Go语言Map实现原理

golang map底层实现

哈希表

深入理解 Go map:赋值和扩容迁移

深度解密Go语言之 map

map 的实现原理 | Go 程序员面试笔试宝典

遍历过程 | Go 程序员面试笔试宝典

create:

- 字面量 创建:使用常量创建

- 函数创建: make

1

2

3

4

5

6

|

//--1. init

// 1.literal

var a = map[int]int{1:1, 2:2};

// 2. make

var b = make(map[int]int);

|

basic operation:

1

2

3

4

5

|

delete(m,"1")

for k,v := range m

v, ok =m["1"]

|

how

key pointer:

- hash func

- hash collision: 使用改进版的链表法,节点嵌套数组,减少内存创建和回收的cost

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

|

index = hashCode % bucklength

if buckets[index].full == no:

buckets[index].append(key,value)

else:

bucket = buckets[index]

for:

bucket = bucket.next

if bucket == nil:

break;

if bucket.full == no

buckets[index].append(key,value)

break;

|

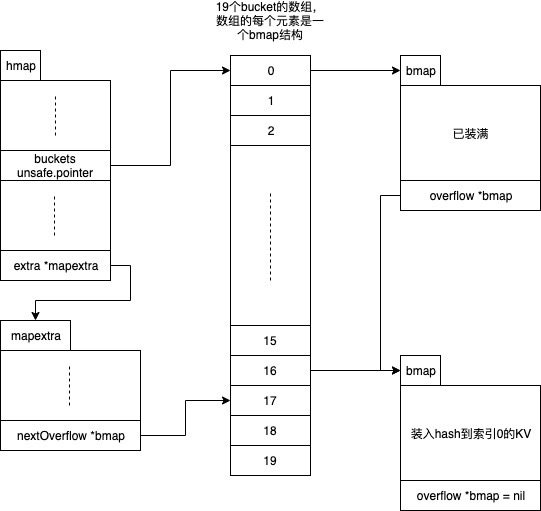

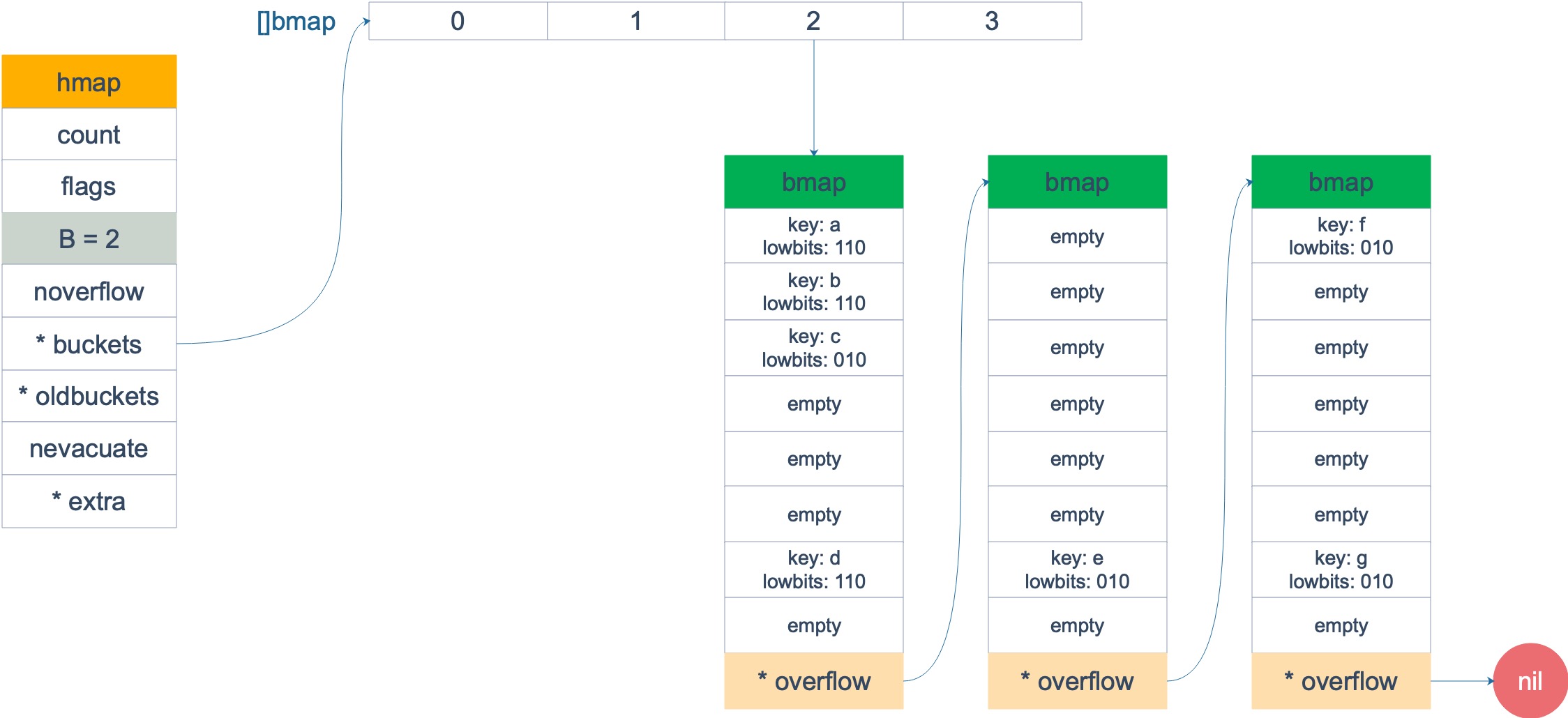

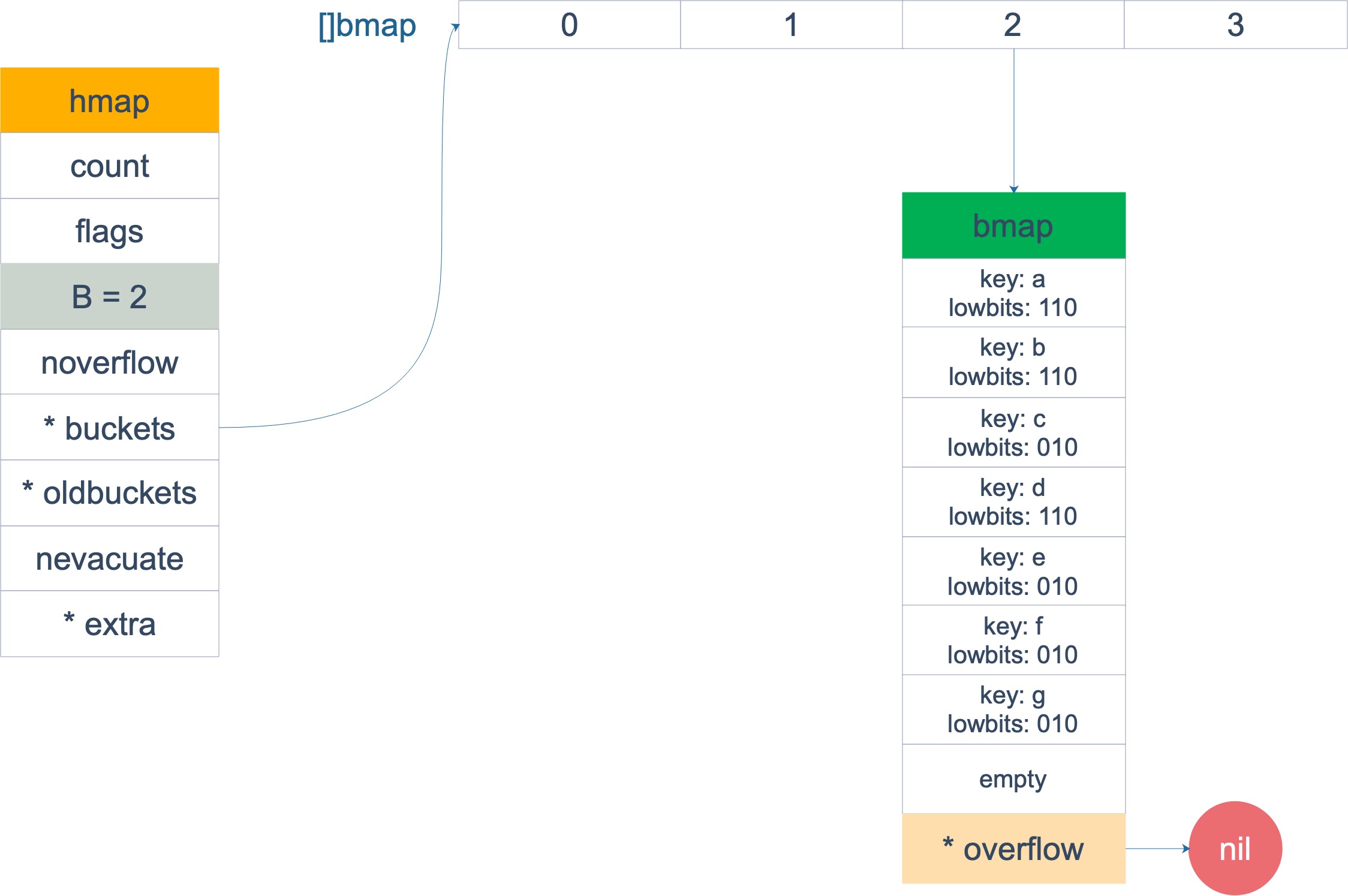

base structure

simple

1

2

3

4

5

6

|

hmap->[]bucket

bucket:

[]key

[]value

*overflowBucket

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

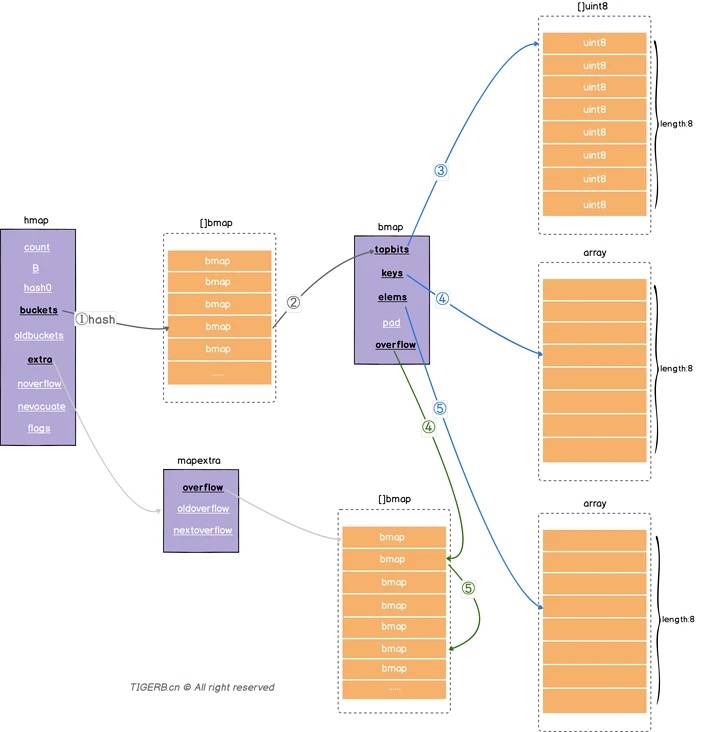

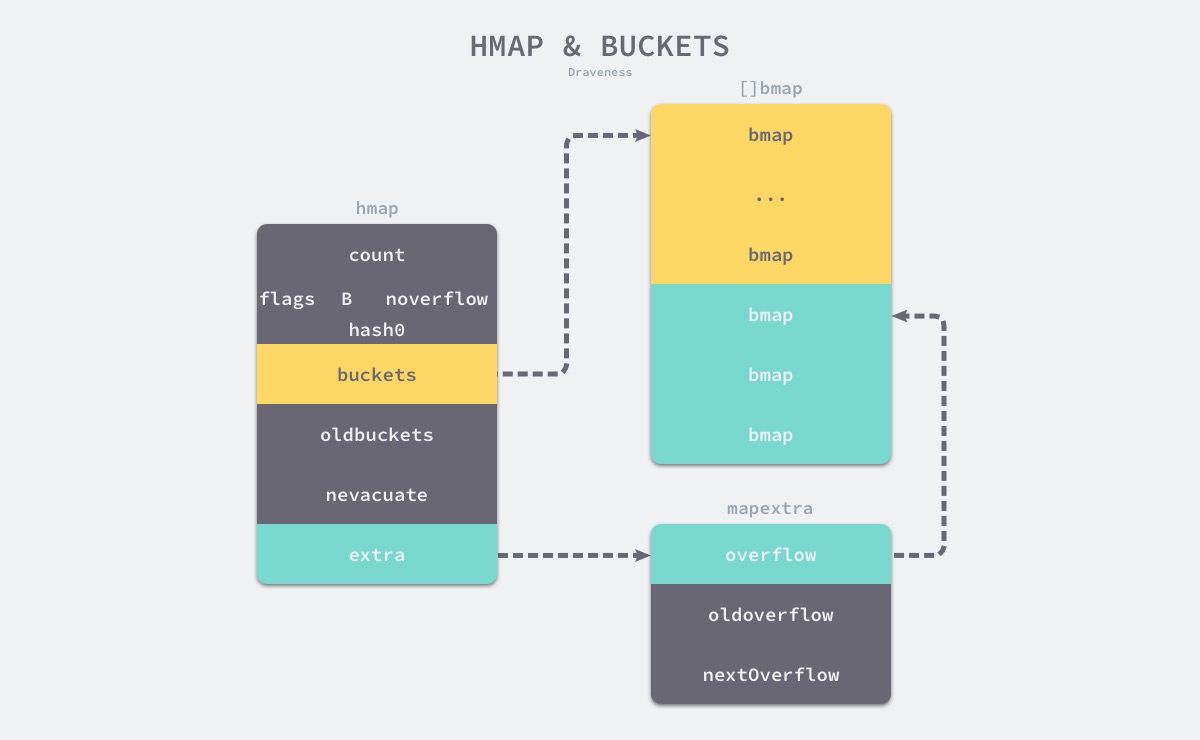

type hmap struct {

count int

flags uint8

B uint8 // 创建 2 ^ B bucket

noverflow uint16 // overflow 的 bucket 近似数

hash0 uint32

buckets unsafe.Pointer // []bmap

oldbuckets unsafe.Poiner

nevacuate uintptr

extra *mapextra

}

// bucket

type bmap struct {

tophash [8]uint8

keys [8]keytype

values [8]valuetype

pad uintptr

overflow uintptr // next bmap

}

|

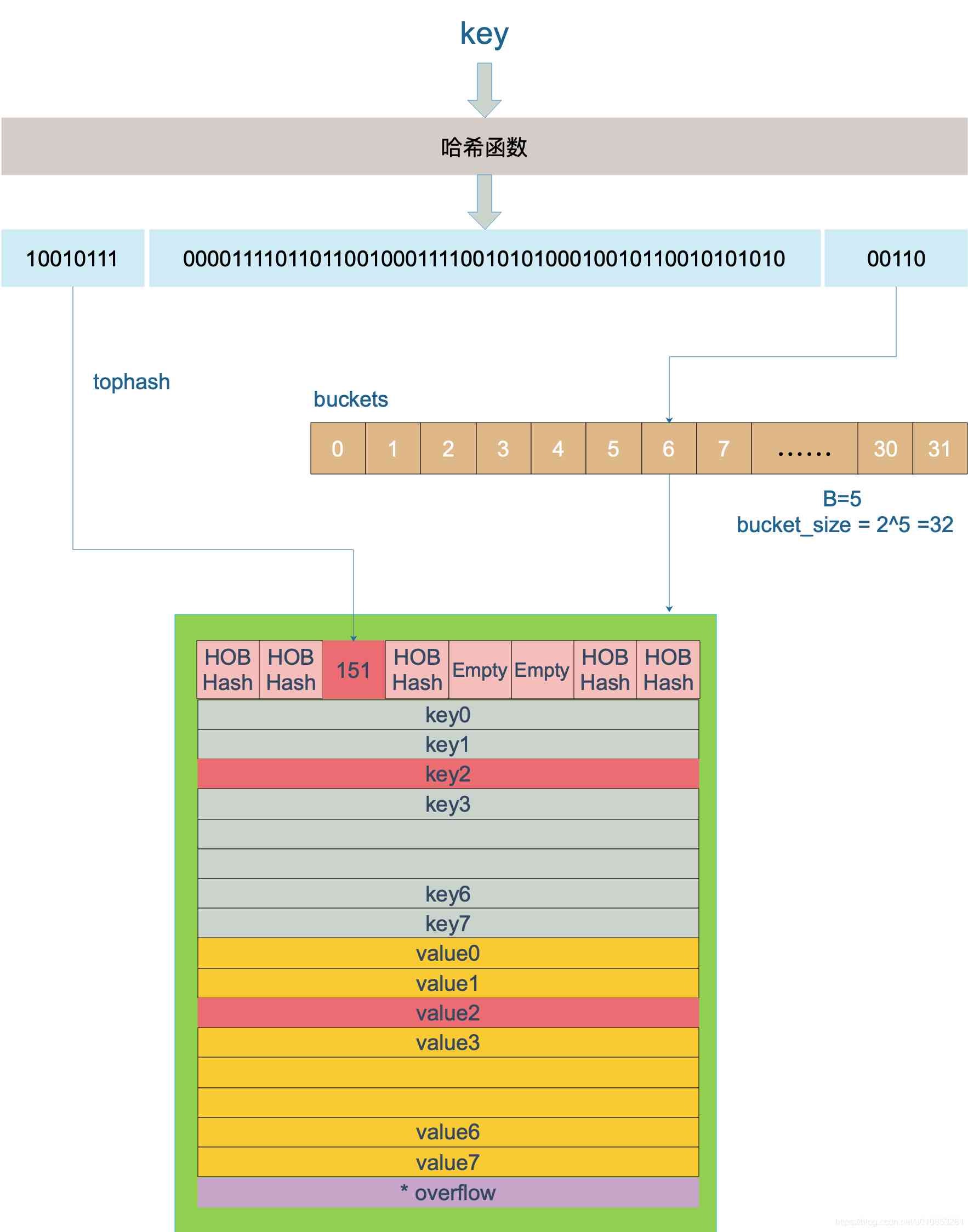

hash fun

64bit:

- last 2^b bit code to index bucket

- first 8 bit code to index bmap.array

1

2

|

if cpu support aes instruction: hash with aes

use memhash

|

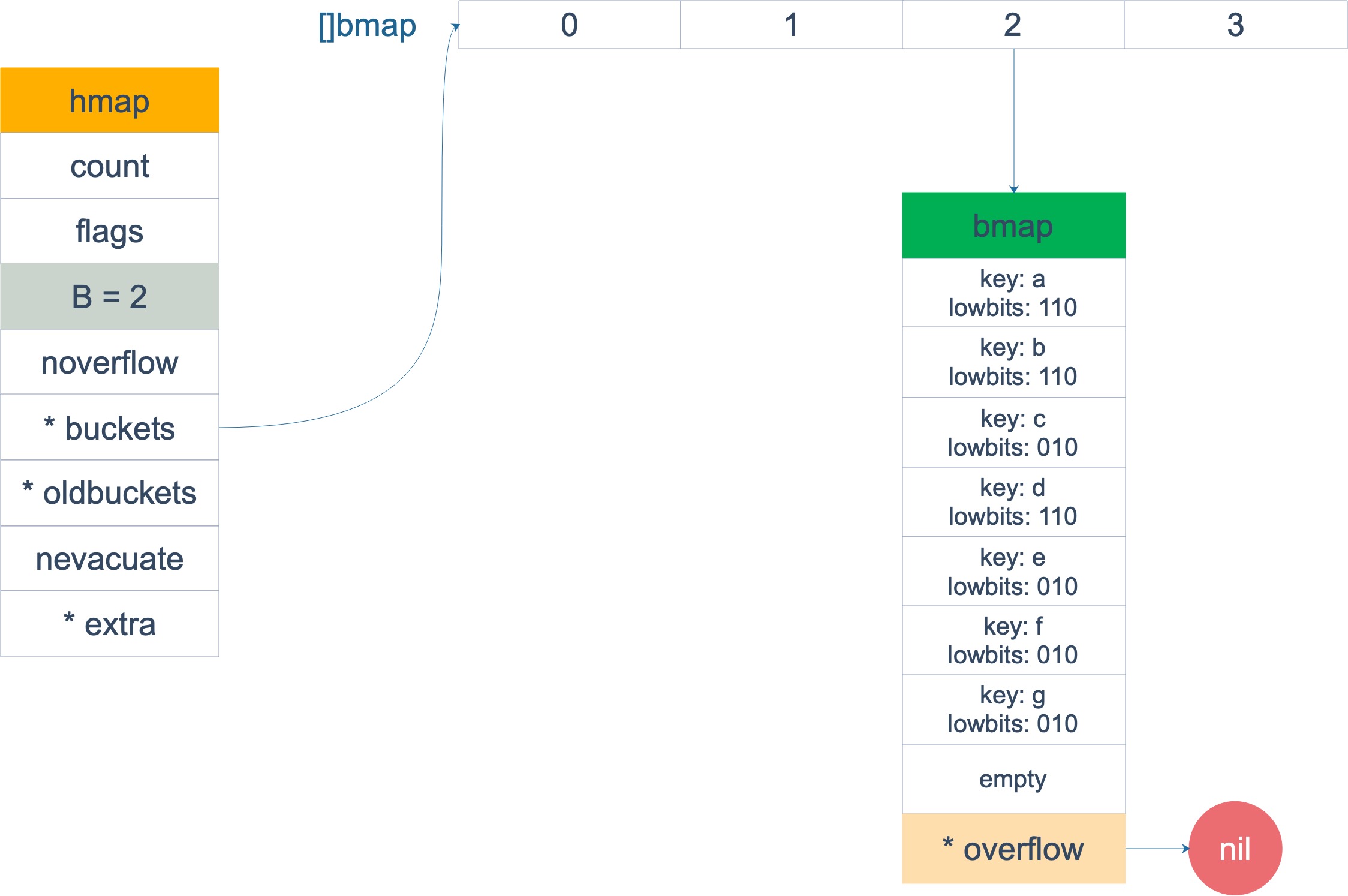

overflow

how:

- linker node contain a array,

- 提前分配好 extra []bmap

access a key

- 遍历数组和overflow

- compare tophash and key

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

|

for {

// 遍历 bucket 的 8 个位置

for i := uintptr(0); i < bucketCnt; i++ {

// tophash 不匹配,继续

if b.tophash[i] != top {

continue

}

// tophash 匹配,定位到 key 的位置

k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))

// key 是指针

if t.indirectkey {

// 解引用

k = *((*unsafe.Pointer)(k))

}

// 如果 key 相等

if alg.equal(key, k) {

// 定位到 value 的位置

v := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.valuesize))

// value 解引用

if t.indirectvalue {

v = *((*unsafe.Pointer)(v))

}

return v

}

}

// bucket 找完(还没找到),继续到 overflow bucket 里找

b = b.overflow(t)

// overflow bucket 也找完了,说明没有目标 key

// 返回零值

if b == nil {

return unsafe.Pointer(&zeroVal[0])

}

}

|

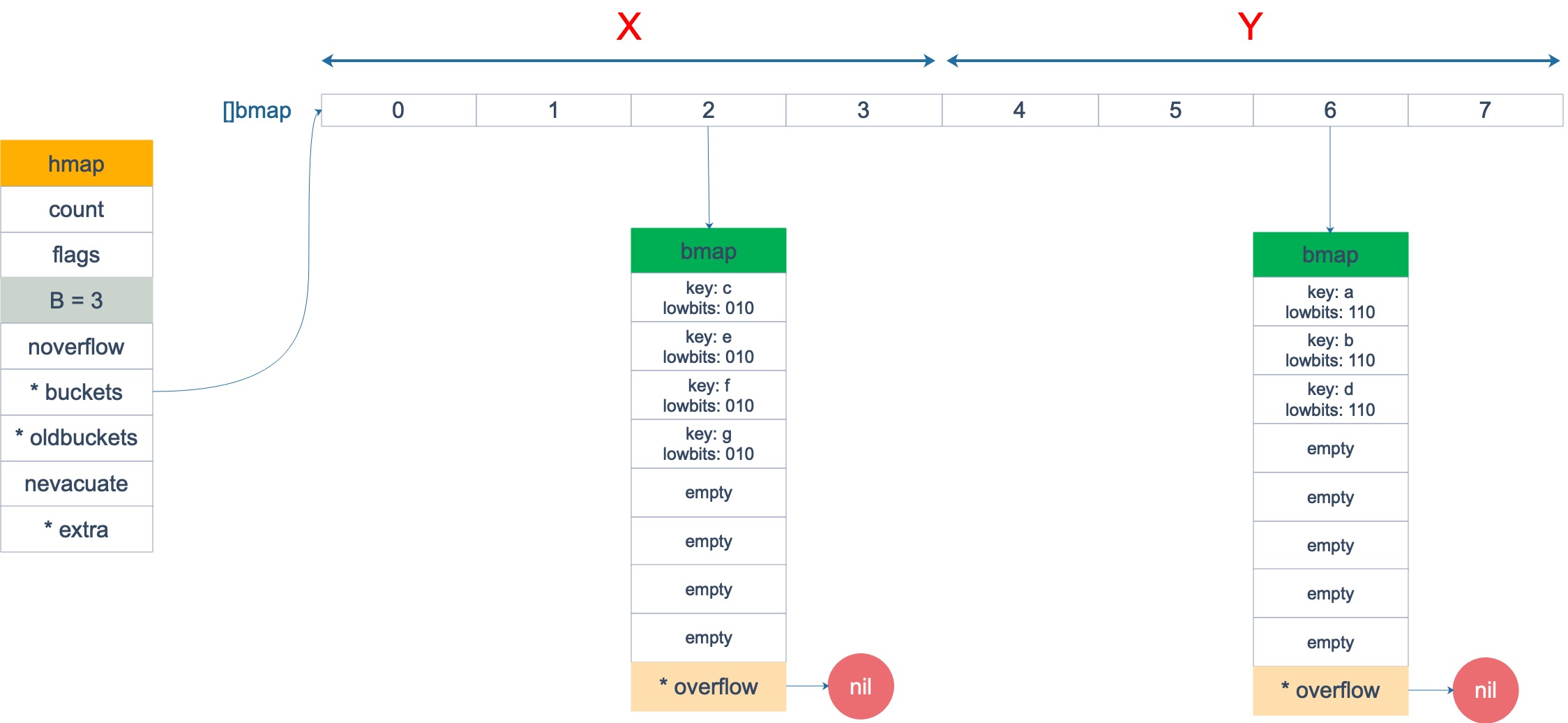

rehash

what: 为了防止查询效率退化(too many overflow),

- 增加bucket ,

- 删除过多的overflow

factor:

1

2

3

|

totalKeys/totalBuckt>=6.5

overflowBucket >= totalbuckets // increase then delete

|

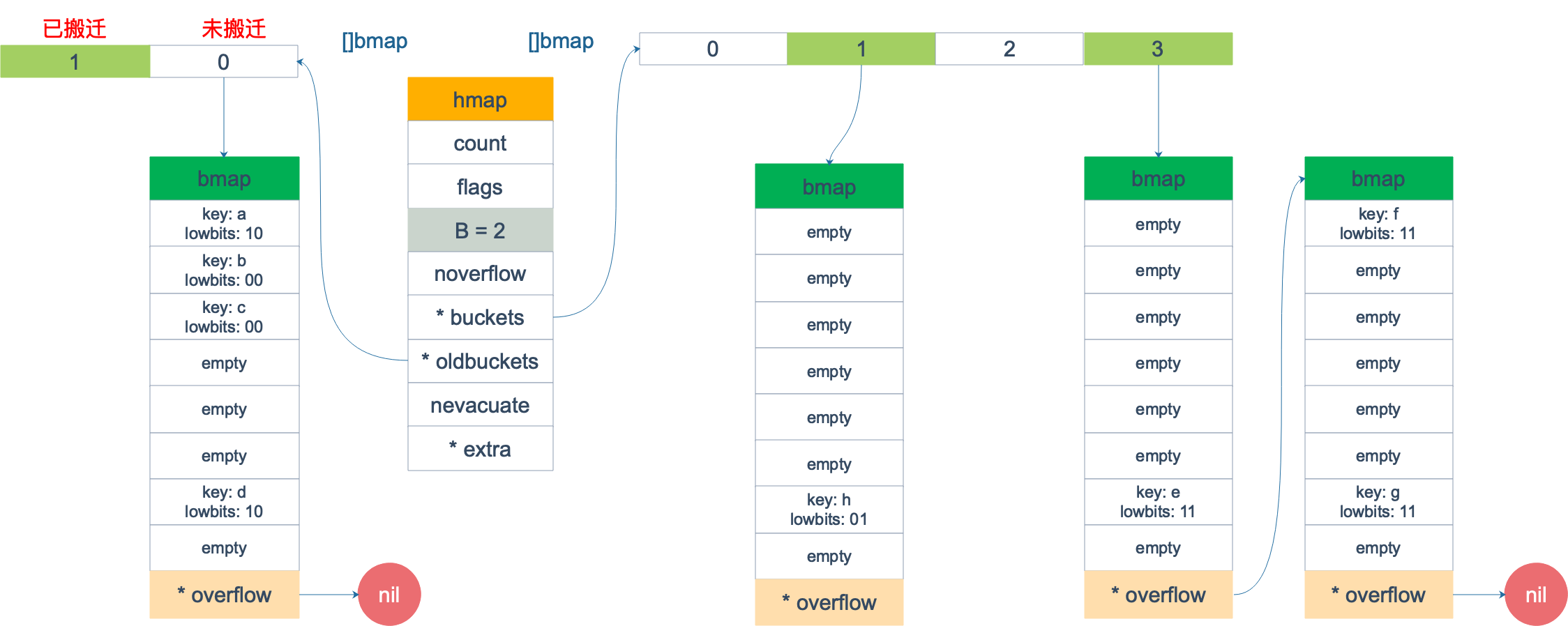

how:

- allocate new bucket

- 渐进式迁移,在更新或者删除触发变迁动作

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

|

func hashGrow(t *maptype, h *hmap) {

// B+1 相当于是原来 2 倍的空间

bigger := uint8(1)

// 对应条件 2

if !overLoadFactor(int64(h.count), h.B) {

// 进行等量的内存扩容,所以 B 不变

bigger = 0

h.flags |= sameSizeGrow

}

// 将老 buckets 挂到 buckets 上

oldbuckets := h.buckets

// 申请新的 buckets 空间

newbuckets, nextOverflow := makeBucketArray(t, h.B+bigger)

flags := h.flags &^ (iterator | oldIterator)

if h.flags&iterator != 0 {

flags |= oldIterator

}

// 提交 grow 的动作

h.B += bigger

h.flags = flags

h.oldbuckets = oldbuckets

h.buckets = newbuckets

// 搬迁进度为 0

h.nevacuate = 0

// overflow buckets 数为 0

h.noverflow = 0

// ……

}

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

func mapdelete(t *maptype, h *hmap, key unsafe.Pointer) {

.....

bucket := hash & bucketMask(h.B)

if h.growing() {

growWork(t, h, bucket)

}

}

func growWork(t *maptype, h *hmap, bucket uintptr) {

// 搬迁正在使用的旧 bucket

evacuate(t, h, bucket&h.oldbucketmask())

// 再搬迁一个 bucket,以加快搬迁进程

if h.growing() {

evacuate(t, h, h.nevacuate)

}

}

func (h *hmap) growing() bool {

return h.oldbuckets != nil

}

|

too many overflow:

factor > 6.5

factor: len(maps)/cap(maps)=6.5; total keys/ total bucket

factor: len(maps)/cap(maps)=6.5, 大致使用65%的bucket容量

why 6.5: 空间和时间(查询效率)权衡,

> 6.5: 冲突太多

< 6.5: 浪费空间

data: 20% overflow, check 4.5 to look up ;

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

|

// Picking loadFactor: too large and we have lots of overflow

// buckets, too small and we waste a lot of space. I wrote

// a simple program to check some stats for different loads:

// (64-bit, 8 byte keys and values)

// loadFactor %overflow bytes/entry hitprobe missprobe

// 4.00 2.13 20.77 3.00 4.00

// 4.50 4.05 17.30 3.25 4.50

// 5.00 6.85 14.77 3.50 5.00

// 5.50 10.55 12.94 3.75 5.50

// 6.00 15.27 11.67 4.00 6.00

// 6.50 20.90 10.79 4.25 6.50

// 7.00 27.14 10.15 4.50 7.00

// 7.50 34.03 9.73 4.75 7.50

// 8.00 41.10 9.40 5.00 8.00

//

// %overflow = percentage of buckets which have an overflow bucket

// bytes/entry = overhead bytes used per key/value pair

// hitprobe = # of entries to check when looking up a present key

// missprobe = # of entries to check when looking up an absent key

|

how:

- bucket * 2

- 重新计算 index;

- lazy move: 当发生(删除,修改,插入) , 一次移动一个bucket

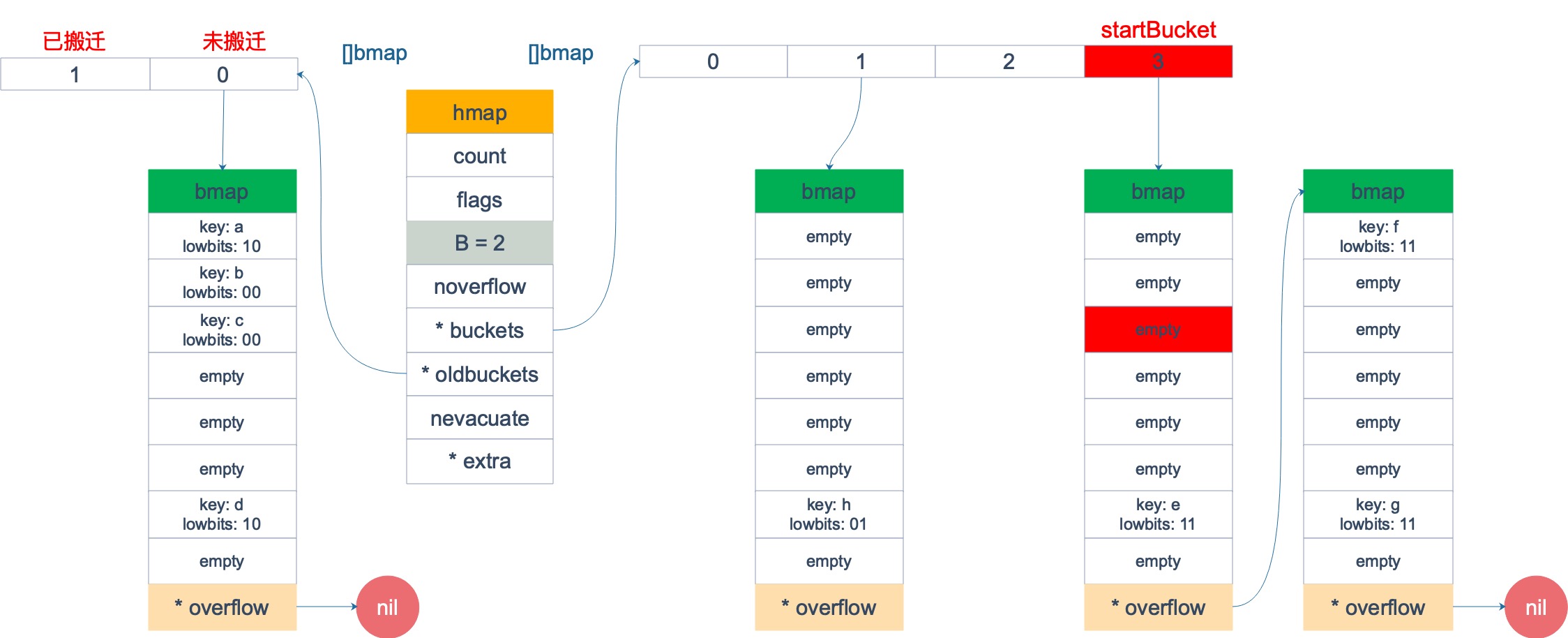

遍历过程以及无序

traverse

generate random start bucket index and offset entry index

1

2

3

4

5

6

7

8

9

|

r := uintptr(fastrand())

if h.B > 31-bucketCntBits {

r += uintptr(fastrand()) << 31

}

// 从哪个 bucket 开始遍历

it.startBucket = r & (uintptr(1)<<h.B - 1)

// 从 bucket 的哪个 key 开始遍历

it.offset = uint8(r >> h.B & (bucketCnt - 1))

|

- 开始遍历, 如果 in 迁移 state, 检测对应的老bucket 是否迁移过

- 未迁移, 则遍历老bucket

unorder

why:

- map不稳定结构,无法保证一致的顺序,rehash后key的顺序就会改变

- go在遍历开始生成随机的遍历起始位置,为避免给新手程序员误解